A single NVMe drive stores temporary files, such as MapReduce temporary and spill files and the Spark cache. One disk holds local logs; it can be shared with the operating system and other Hadoop logs.

To move HBase data, copy the hbase folder to a different Azure Storage blob container or Data Lake Storage location, and then start a new cluster with that data. For Azure Storage, use AzCopy; for Data Lake Storage, use AdlCopy. The new instance is created with all the existing data.
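As a rough illustration of the AzCopy step, the sketch below builds the command line for copying the hbase folder between blob containers. It assumes AzCopy v10, whose `copy` subcommand accepts `--recursive`; the account and container names are hypothetical placeholders, and authentication (for example a SAS token appended to each URL) is left out. By default the helper only returns the command so it can be inspected before anything is run.

```python
import subprocess


def build_azcopy_command(source_url, dest_url):
    """Build an AzCopy v10 command that copies a folder recursively."""
    return ["azcopy", "copy", source_url, dest_url, "--recursive"]


def copy_hbase_folder(source_url, dest_url, dry_run=True):
    """Return the command; execute it only when dry_run is False."""
    cmd = build_azcopy_command(source_url, dest_url)
    if not dry_run:
        # Requires the azcopy binary on PATH and valid credentials.
        subprocess.run(cmd, check=True)
    return cmd


# Hypothetical placeholder URLs, not real storage accounts.
SRC = "https://<source-account>.blob.core.windows.net/<container>/hbase"
DST = "https://<dest-account>.blob.core.windows.net/<container>"

print(" ".join(copy_hbase_folder(SRC, DST)))
```

Keeping the dry run as the default is a deliberate choice here: copying a live HBase folder is easy to get wrong, so it helps to review the exact command first.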

Archive storage is a good option for full disaster recovery or for compliance and auditing scenarios.

There is no read access; it is a write-only storage area.

When you put data there, you are not planning to get it back out.

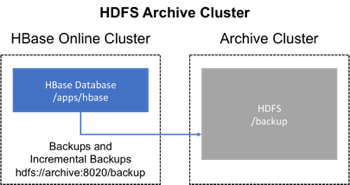

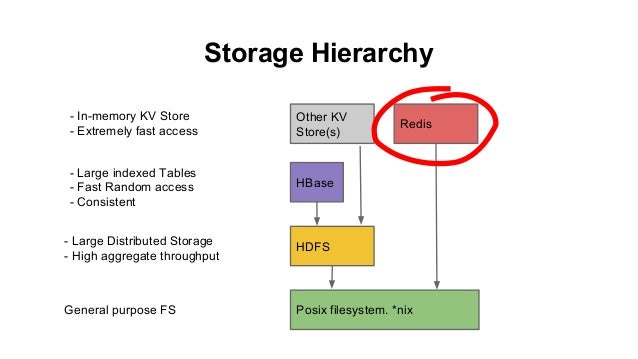

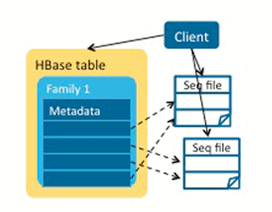

Archive Storage: This is truly an archive storage option. This is what Microsoft uses to manage the cost of the storage.

Create a new HDInsight instance pointing to the current storage location.

Thrift Server: 1 GB - 4 GB. Set this value using the Java Heap Size of HBase Thrift Server in Bytes HBase configuration property.

Setting up the HBase cluster for Timeline Service v.2 takes three steps:

Step 1) Set up the HBase cluster

Step 2) Enable the coprocessor

Step 3) Create the schema for Timeline Service v.2

Each step is explained in more detail below.

Step 1) Set up the HBase cluster. The first part is to set up or pick an Apache HBase cluster to use as the storage cluster.

Check whether the configuration property is present in hbase-site.xml.
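The hbase-site.xml check can be sketched in a few lines of Python. This is a minimal example that assumes the standard Hadoop-style layout, a `<configuration>` root containing `<property>` elements with `<name>` and `<value>` children. The source does not say which property to look for, so `example.property.name` below is a hypothetical placeholder, as is the inline sample file.

```python
import xml.etree.ElementTree as ET


def get_hadoop_property(xml_text, name):
    """Return the value of property `name`, or None if it is absent."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value")
    return None


# Hypothetical sample; a real check would read hbase-site.xml from disk.
SAMPLE = """<configuration>
  <property>
    <name>example.property.name</name>
    <value>example-value</value>
  </property>
</configuration>"""

print(get_hadoop_property(SAMPLE, "example.property.name"))
print(get_hadoop_property(SAMPLE, "missing.property"))
```

To check a real file, read its contents (for example with `open(path).read()`) and pass the text together with the property name you are looking for.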
